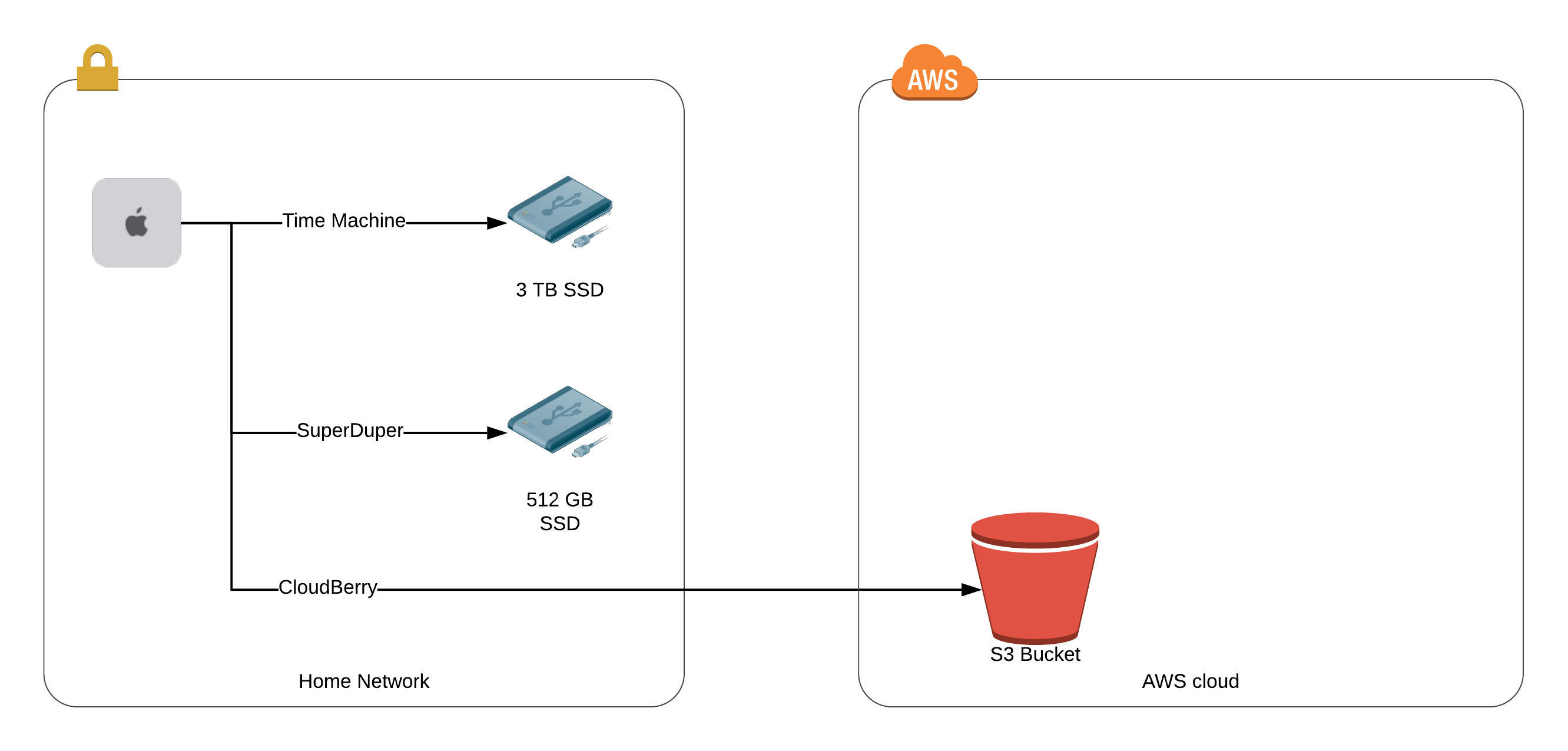

I’ve been asked a few times about my backup strategy, so I thought I would share it here. Let’s start with a diagram and then we’ll walk through it.

My Device

My daily driver is a 2018 Mac mini and I am all-in on the Mac ecosystem. My backup solution is tailored to macOS from a home with a high-speed internet connection.

First Level Backup - Drive Imaging

The problem I wanted to solve here is getting back up and running quickly if my internal disk suddenly fails, even if the restored data isn’t completely up to date. To solve this, I turn to a bootable disk image.

To accomplish this, I need two things: 1) an externally attached disk and 2) software to mirror my internal drive and make the copy bootable.

The drive that I’m using for imaging is a Samsung T5 Portable SSD - 500GB. You need a drive that is the same size as your internal storage and nothing larger. There is no value in buying a larger drive for this purpose, so don’t bother spending the extra money. I decided to go with an SSD rather than a spinning disk for the mirroring, both for speed and because SSDs have dropped greatly in price.

I am not sure when I first turned to SuperDuper, but it has to have been over a decade ago. I needed a way to image a drive for an earlier version of macOS, did a Google search, and found Shirt Pocket and their software; it worked flawlessly for my needs. Eventually, I moved beyond the free version, which opened up a scheduler, Smart Update, and a few other extra features that I haven’t yet used. The scheduler is needed to set up automatic nightly updates, and Smart Update lets those updates run without reimaging the entire disk each time, which greatly cuts the processing time.
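To make the idea behind an incremental update concrete, here is a minimal Python sketch of "copy only what changed." It is not how SuperDuper works internally (I don’t know its implementation, and this does nothing to make a disk bootable); it just assumes that a file needs recopying when it is missing from the mirror or when its size or modification time differs. The names `needs_copy` and `smart_update` are made up for this illustration.

```python
import os
import shutil


def needs_copy(src: str, dst: str) -> bool:
    """Return True when dst is missing or differs from src in size or mtime."""
    if not os.path.exists(dst):
        return True
    s, d = os.stat(src), os.stat(dst)
    return s.st_size != d.st_size or int(s.st_mtime) != int(d.st_mtime)


def smart_update(source_root: str, mirror_root: str) -> list:
    """Copy only new or changed files; return the relative paths copied."""
    copied = []
    for folder, _dirs, files in os.walk(source_root):
        rel_folder = os.path.relpath(folder, source_root)
        dst_folder = os.path.normpath(os.path.join(mirror_root, rel_folder))
        os.makedirs(dst_folder, exist_ok=True)
        for name in files:
            src = os.path.join(folder, name)
            dst = os.path.join(dst_folder, name)
            if needs_copy(src, dst):
                # copy2 also copies the modification time, so an unchanged
                # file is skipped on the next run.
                shutil.copy2(src, dst)
                copied.append(os.path.normpath(os.path.join(rel_folder, name)))
    return sorted(copied)
```

The first run copies everything; later runs only touch files that changed, which is why a nightly update can finish much faster than a full reimage. This sketch never deletes files from the mirror that were removed from the source, which a real mirroring tool would have to handle.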

Now that I have the drive and the software to create the bootable copy, the only thing left is to set up the scheduler. I run it nightly at 4:00 AM. Within 2-3 hours, it is complete and I have a bootable copy. Of course, 4:00 AM may not be the best time for you. Choose an overnight time when you are unlikely to be at the machine, as things do slow down while the backup runs.

At this point, I have a bootable disk image updated daily. I could just stop here and have a pretty good backup solution.

But I didn’t…

Second Level Backup - Hourly Incremental

In addition to the daily bootable backup with SuperDuper, I run Time Machine backups. Time Machine is Apple’s built-in archive solution that keeps not only a complete disk backup but also incremental hourly updates. It even goes a bit further by keeping multiple versions of archived files. The versioning, though, is merely a bonus. If you accidentally saved some changes to a Pages document and then realized you wanted the version from 12 hours ago, you’ve got that option. This versioning is not something to depend on the way you would depend on svn or git, however. As backup disk space runs out, older versions and backups are automatically removed to keep the full backup in place.

The backups work like this: hourly for the past 24 hours, then daily for the past month, then weekly thereafter. How far back Time Machine can go depends on the size of the Time Machine volume. This is where you want a drive with 3x or more the capacity of your internal disk rather than one of equal size since, again, older backups are removed as space runs out.
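The hourly/daily/weekly schedule is easier to picture with a small example. The Python sketch below is only an approximation of that kind of retention policy, not Apple’s actual algorithm: it assumes "the past month" means 30 days, groups weeks by ISO week number, and keeps the newest snapshot in each bucket. The function name `thin_snapshots` is hypothetical.

```python
from datetime import datetime, timedelta


def thin_snapshots(snapshots, now):
    """Return the snapshots an hourly/daily/weekly policy would keep."""
    keep = []
    seen_buckets = set()
    for snap in sorted(snapshots, reverse=True):  # newest first
        age = now - snap
        if age <= timedelta(hours=24):
            # Past 24 hours: one snapshot per clock hour.
            bucket = ("hour", snap.strftime("%Y-%m-%d %H"))
        elif age <= timedelta(days=30):
            # Past month (assumed to be 30 days): one per calendar day.
            bucket = ("day", snap.date())
        else:
            # Older than that: one per ISO week.
            iso = snap.isocalendar()
            bucket = ("week", iso[0], iso[1])
        if bucket not in seen_buckets:
            seen_buckets.add(bucket)
            keep.append(snap)
    return sorted(keep)


now = datetime(2020, 3, 1, 12, 0)
snapshots = [
    now - timedelta(hours=1),
    now - timedelta(hours=2),
    now - timedelta(days=3, hours=1),
    now - timedelta(days=3, hours=2),   # same day as the one above, dropped
    now - timedelta(days=40),
    now - timedelta(days=40, hours=1),  # same week as the one above, dropped
]
kept = thin_snapshots(snapshots, now)
print(len(kept), "of", len(snapshots), "snapshots kept")
```

Note that this sketch does not model the other half of the behavior, where the oldest backups are removed once the volume fills up. That part depends on how much space you give it.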

Why did I go with Time Machine when there are many other choices out there? What am I trying to achieve with the second level backup?

- Easy to implement

- Not expensive $$

- Hourly updates

- Good level of backups (hourly, daily, weekly, monthly)

- Locally stored

- Under my control

Time Machine comes free and out of the box with macOS. Its default configuration keeps hourly, daily, and weekly backups, reaching back months as space allows. It is a few clicks to set up. The data never leaves my desk.

There are a couple of choices on what type of drive to use and how to connect it. I went with a Toshiba HDTB330XK3CB Canvio Basics 3TB USB drive that I had lying around and connected it to the 2018 Mac mini directly. If you have more machines to back up, a larger disk on the local network might be the better choice, but since I had the drive sitting around, it worked for me.

Now, what about off-site backups?

Third Level Backup - Cloud Backup

The one thing I don’t have at this point is an offsite backup. What I wanted here was an easy tool to back up my most important files to the cloud.

The first question I had to answer was what files do I want to upload to a cloud hosting service? Since cloud storage has a monthly storage cost attached to it and my ISP’s upload speed is not nearly as good as my download speed (For those of you wondering, I get about 400/25), I want to be picky about what gets backed up remotely.
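To see why upload speed matters so much here, a bit of arithmetic helps. The sketch below assumes the 400/25 figure is in megabits per second (download/upload) and uses a made-up 100 GB of data purely for illustration; real transfers will be slower because of protocol overhead, which the optional `efficiency` factor can approximate.

```python
def upload_hours(size_gb: float, upload_mbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to send size_gb gigabytes over an upload_mbps link."""
    megabits = size_gb * 1000 * 8  # decimal units: 1 GB = 1000 MB = 8000 megabits
    seconds = megabits / (upload_mbps * efficiency)
    return seconds / 3600


# Hypothetical 100 GB of files at the two speeds mentioned above.
print(f"At 25 Mbps:  {upload_hours(100, 25):.1f} hours")
print(f"At 400 Mbps: {upload_hours(100, 400):.1f} hours")
```

At 25 Mbps, that hypothetical 100 GB takes roughly nine hours of sustained uploading, which is a good reason to send only the files that really matter.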

I chose the following folders to back up:

~/Desktop

~/Documents

~/Pictures

~/Important\ Stuff

I’d recommend adding any other folders of user-created content that can’t be retrieved if your house burns down. This doesn’t mean your Applications folder since you can restore that from Apple’s Mac App Store or from downloading again from the publisher’s website.
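Before committing folders to a paid cloud service, it helps to know how big they are. This is a small Python sketch, assuming the folder list above, that walks each folder and totals the file sizes. The helper name `folder_size_bytes` is my own, and the total is only an estimate since it skips symlinks and any files it can’t read.

```python
import os


def folder_size_bytes(path: str) -> int:
    """Total size in bytes of the regular files under path."""
    total = 0
    for folder, _dirs, files in os.walk(os.path.expanduser(path)):
        for name in files:
            full = os.path.join(folder, name)
            if os.path.islink(full):
                continue  # don't count link targets
            try:
                total += os.path.getsize(full)
            except OSError:
                pass  # unreadable or vanished file; skip it
    return total


if __name__ == "__main__":
    # The folders chosen above; no shell escaping is needed inside Python.
    for folder in ["~/Desktop", "~/Documents", "~/Pictures", "~/Important Stuff"]:
        size_gb = folder_size_bytes(folder) / 1e9
        print(f"{folder}: {size_gb:.2f} GB")
```

A folder that doesn’t exist simply reports 0.00 GB, so the list can be adjusted freely.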

The next question is where do I put all this important stuff? I chose Amazon Glacier. It is cheap long-term storage in Amazon’s cloud with high durability. It is completely under my control, and I can decide whether or not to encrypt the data with my own keys before uploading. As for price, I think I pay somewhere between $5 and $6 per month for multiple TBs of data. For full pricing, take a look at Amazon’s pricing page.

The final question I asked was what tool do I use to get this important stuff to Amazon Glacier? I found Cloudberry Backup for Mac to solve this problem. Why did I choose Cloudberry Labs?

To borrow Steve Gibson’s phrase, TNO (Trust No One): this software package ticks the boxes for that approach to security.

- The software leaves me in control of what I am backing up and to where that data is going.

- I choose the compression

- I choose the encryption at rest

- It allows for HTTPS/TLS encryption in transit

- I choose the final cloud destination be it S3/Glacier, Google Cloud or Azure.

Beyond that, the software is a breeze to configure, and if you do run into issues, Cloudberry’s support pages or support contacts should be able to help.

Notes

I didn’t include a HOW-TO with pretty screenshots to implement these backup options but just described the strategy here. If anyone wants a step-by-step, let me know in the comments and I’ll work on it. There are already many good references for each of these applications out there and I don’t think I could improve upon them.

Warning

Please, test your backups with restores.

- Boot from the SuperDuper drive. Does it boot correctly with all the data seemingly in place?

- Open up and dive into Time Machine. Look at some file history. Grab a file. Can you open and read it?

- Log in to S3 and verify the files exist in Glacier. Pull a file back. Can you open and read it?

Taking backups is fantastic but if you can’t get the data back because of corruption, backups are pointless.

I would suggest testing at least every six months to confirm all is healthy.
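If you want something stricter than "can I open it," one option is to compare a checksum of the restored file against the original. Here is a minimal Python sketch of that check using SHA-256 from the standard library; the function names are my own, and the comparison is only meaningful if the original hasn’t been edited since that backup was taken.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches(original: str, restored: str) -> bool:
    """True when the restored file is byte-for-byte identical to the original."""
    return sha256_of(original) == sha256_of(restored)
```

Calling `restore_matches` with the path of a file on your Mac and the path of the copy you pulled back from a backup returns `True` only when the two are identical. Reading in chunks keeps memory use low even for large photo or video files.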

Conclusion

This three-tiered backup implementation might be overkill for most, but it gives me the peace of mind I need and provides an optimal solution to get back up and running quickly in the event of various types of data loss.

You may decide to just go with the bootable disk image, just Time Machine, or just an online solution like Carbonite or Backblaze. You may pick two of the three. You may be paranoid like me and do all three. Start with one and increase based on your needs.

Happy Backups!